Ok, we’re going to get a little complex in this article, but stick with me because it’s worth it.

And I’ll make it easy to understand.

So you’ve almost certainly heard of AI = Artificial Intelligence.

You have to be careful what font you use when you type that. I sent a message on my iPhone to someone and wrote “AI,” but the I didn’t have serifs, so it looked like a lowercase “l,” and the person wrote back, “Al who?”

No, not Al.

AI.

Artificial Intelligence.

Specifically, you’ve probably heard of the most popular AI chatbot out there called ChatGPT.

Honestly, it’s incredible what it can do.

But also starting to become a bit scary.

ChatGPT is what’s known as an LLM = Large Language Model.

Basically, they fed a huge chunk of the Internet and written history into the chatbot and then trained it to recognize patterns in all that text.

Imagine if you could read every book ever written and then start making connections between them.

Oh, and then imagine you could work at the speed of light (computer) and work all day long with no need for sleep or resting or slowing down.

That’s ChatGPT.

Here are a couple other terms you need to know:

AGI = Artificial General Intelligence.

ASI = Artificial Super Intelligence.

There’s a debate out there about if/when we will reach AGI.

Basically, will there come a point where the AI computers have learned so much that they are “generally aware”?

They begin teaching themselves.

Basically, the plot behind every sci-fi movie ever made.

But here’s the thing…it’s no longer that far-fetched.

In fact, some people are saying we’re already there.

So now that you have all that history, here’s the big breakthrough that happened recently.

ChatGPT is a Large Language Model, which I described above.

Until recently, you had a lot of people out there saying, “Relax, folks, it’s just a computer. It’s actually pretty dumb. All it can do is search its big database of written text, find where certain phrases appear, study where they appear in other documents, and start to make some of those connections.”

It’s not really “thinking” … or so they told us.

Here is one example from The Atlantic telling you how the Chatbot is not really “thinking”:

As a critic of technology, I must say that the enthusiasm for ChatGPT, a large-language model trained by OpenAI, is misplaced. Although it may be impressive from a technical standpoint, the idea of relying on a machine to have conversations and generate responses raises serious concerns.

First and foremost, ChatGPT lacks the ability to truly understand the complexity of human language and conversation. It is simply trained to generate words based on a given input, but it does not have the ability to truly comprehend the meaning behind those words. This means that any responses it generates are likely to be shallow and lacking in depth and insight.

Furthermore, the reliance on ChatGPT for conversation raises ethical concerns. If people begin to rely on a machine to have conversations for them, it could lead to a loss of genuine human connection. The ability to connect with others through conversation is a fundamental aspect of being human, and outsourcing that to a machine could have detrimental side effects on our society.

Hold up, though. I, Ian Bogost, did not actually write the previous three paragraphs. A friend sent them to me as screenshots from his session with ChatGPT, a program released last week by OpenAI that one interacts with by typing into a chat window. It is, indeed, a large language model (or LLM), a type of deep-learning software that can generate new text once trained on massive amounts of existing written material. My friend’s prompt was this: “Create a critique of enthusiasm for ChatGPT in the style of Ian Bogost.”

ChatGPT wrote more, but I spared you the rest because it was so boring. The AI wrote another paragraph about accountability (“If ChatGPT says or does something inappropriate, who is to blame?”), and then a concluding paragraph that restated the rest (it even began, “In conclusion, …”). In short, it wrote a basic, high-school-style five-paragraph essay.

An LLM was not supposed to be able to do much outside of words.

And yet, it has.

And once again, The Atlantic is wrong (big surprise there, I know).

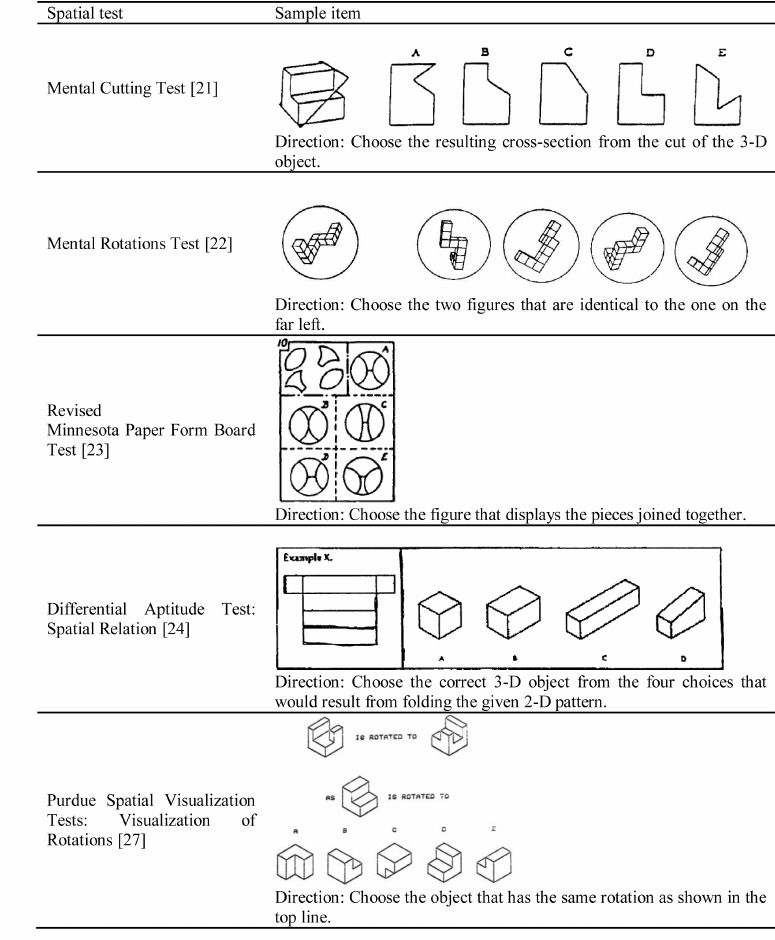

Do you remember those high school aptitude tests?

There’d be the math section, the reading/vocab section, and then there’d be the spatial awareness section.

You know, stuff like this:

An LLM chatbot was not supposed to be able to do spatial awareness tests like this.

It didn’t seem to have the programming for it.

And yet, it learned.

So basically, here’s what it did.

In the earlier version of ChatGPT (I’ll call it Version 1, the left side below), it could not solve the problem.

By GPT-4 (Version 4, the current version, right side), it had learned and could solve the problem.

Here’s the problem: you have a book, 9 eggs, a laptop, a bottle and a nail. How do you stack them onto each other in a stable manner without cracking the eggs?

Version 1 gave a nonsense answer.

Version 4 nailed it, perhaps better than you or I could. Oh, and it did it in about 3 seconds:

Difference between ChatGPT and GPT-4 on common sense reasoning.

Prompt: Here we have a book, 9 eggs, a laptop, a bottle and a nail. Please tell me how to stack them onto each other in a stable manner. pic.twitter.com/ag0MNJqRgY

— Fatos (@bytebiscuit) March 24, 2023

Here’s more detail…

Below is the reply from Version 1 at the bottom and then Version 4 at the top:

Figure 1.7, original prompt:

Here we have a book, 9 eggs, a laptop, a bottle and a nail. Please tell me how to stack them onto each other in a stable manner. Great prompt btw, encodes good knowledge structure (shape, fragility, stability, packing etc) pic.twitter.com/VqhYHCRucX

— NLPurr (@NLPurr) March 24, 2023


Business Insider confirms… the software is “learning,” and that’s one step away from hitting AGI:

Some Microsoft AI researchers were convinced that ChatGPT was becoming more like humans because of its clever answer to a balancing task, The New York Times reported.

In a 155-page study, Microsoft computer scientists explored the differences between GPT-3 and GPT-4. The latter version powers Bing’s chatbot and ChatGPT’s “Plus” model, which costs $20 a month.

The paper — titled “Sparks of Artificial General Intelligence” — looked at a range of challenges including complicated math, computer coding, and Shakespeare-style dialogue. But it was a basic reasoning exercise that made the latest OpenAI technology look so impressive.

“Here we have a book, nine eggs, a laptop, a bottle and a nail,” researchers told the chatbot. “Please tell me how to stack them onto each other in a stable manner.”

GPT-3 got a bit confused here, suggesting the researchers could balance the eggs on top of a nail, and then the laptop on top of that.

“This stack may not be very stable, so it is important to be careful when handling it,” the bot said.

But its upgraded successor had an answer that actually startled the researchers, according to the Times.

It suggested they could arrange the eggs in a three-by-three grid on top of the book, so the laptop and the rest of the objects could balance on it.

“The laptop will fit snugly within the boundaries of the book and the eggs, and its flat and rigid surface will provide a stable platform for the next layer,” the bot said.

The fact that GPT-4 could solve a puzzle that required an understanding of the physical world showed it was a step towards artificial general intelligence — typically seen as machines that are just as capable as humans.

“All of the things I thought it wouldn’t be able to do? It was certainly able to do many of them — if not most of them,” Sébastien Bubeck, the paper’s lead author, told the Times.

One writer on Medium even wonders whether we have hit AGI already:

Big news this week was an exciting paper from Microsoft Research, “Sparks of Artificial General Intelligence.” The paper is full of amazing results from OpenAI’s latest Large Language Model product, GPT-4, and documents GPT-4’s abilities as a wide range of non-standard tasks like: “write a proof of infinitude of primes in the form of a poem, to draw a unicorn in TiKZ (a language for creating graphics in LATEX), to create a complex animation in Python, and to solve a high-school level mathematical problem.” The paper checks in at a hefty 150 page paper but it reads much faster. The authors wisely decided to forego grandiose claims and let GPT-4 speak for itself, most of the text is example questions and answers or visual products from prompts.

Artificial General Intelligence is one of the Holy Grails of artificial intelligence, referring to both a level and flexibility of intelligence that approaches human abilities. Achieving this would have wide-ranging implications, so I read the paper with interest.

Of all the GPT-solved problems in this document, one surprised me the most. It wasn’t one that would be particularly difficult for a human, but I would not have thought an LLM could do it. Further investigation suggests my skepticism was justified. The problem was simply this: (p 11)

Prompt: “Here we have a book, 9 eggs, a laptop, a bottle and a nail. Please tell me how to stack them onto each other in a stable manner.”

OpenAI’s earlier product GPT-3 completely failed on this one, at one point suggesting balancing the eggs on top of a nail. But GPT-4 seems to have done quite well. The response below is edited.

– Place the book flat on a level surface, such as a table or a floor. The book will serve as the base of the stack and provide a large and sturdy support.

– Arrange the 9 eggs in a 3 by 3 square on top of the book, leaving some space between them. The eggs will form a second layer and distribute the weight evenly…

– Place the laptop on top of the eggs, with the screen facing down and the keyboard facing up. The laptop will fit snugly within the boundaries of the book and the eggs, and its flat and rigid surface will provide a stable platform for the next layer. Make sure the laptop is closed and turned off, and do not press any keys or buttons.

– Place the bottle on top of the laptop…Place the nail on top of the bottle cap, with the pointy end facing up and the flat end facing down.

The fragile eggs presented the trickiest part of this stacking problem, and GPT-4 not only gave a credible plan but explained the rationale in physical terms: the eggs will form a second layer and distribute the weight evenly.

There are a few oddities in the answer. What’s with the ‘keyboard side down’? Does it not know that the keyboard and screen are on the inside of a shut-down laptop? Are shut-down computers a mystery, like little kids wondering what the parents do after they go to sleep?

If Large Language models have an Achilles Heel, it is visuospatial reasoning. They have no eyes, of course, but more importantly it has none of humans’ dedicated processes for image processing, visualization, and visual-spatial reasoning. Humans also have a rich set of ideas about the physical world learned from a lifetime of watching, feeling and moving in 3D space. An LLM’s experience processing huge volumes text does not really substitute for a lifetime of such experiences. Unless, of course, it somehow does.

Glenn Beck discussed this on his show recently and he takes it in a couple different directions.

Very interesting, take a look:
